The promise and the pitfalls of AI

SUMMARY: JMU is assessing the AI landscape and developing local policies and practices in the areas of scholarship, the student experience, societal impact and administrative applications.

Game-changing new technologies inevitably raise red flags. For every touted advancement in knowledge, efficiencies and quality of life, concerns follow about the loss of privacy, security and jobs.

At JMU, the promise of artificial intelligence systems — in the classroom, in our community and in the world beyond campus — is being carefully weighed against the potential pitfalls.

President Jonathan R. Alger has created the Task Force on Artificial Intelligence to inform and expand on current conversations around generative AI. The task force consists of four working groups that will assess the current landscape and develop local policies and practices in the areas of scholarship, the student experience, societal impact and administrative applications.

“With the help and guidance of these working groups, JMU will be able to address both short-term and long-term generative AI impacts, increase our responsiveness to recent technological and social developments, and be better prepared to support the faculty, staff and students of JMU as this technology continues to evolve and change.”

— President Jonathan R. Alger, in an email to faculty and staff

As ChatGPT and other generative AI tools become more common, professors at JMU and across the country are raising concerns about academic integrity.

This past spring, the Chronicle of Higher Education, a leading trade publication, asked faculty members at colleges and universities to share their experiences with ChatGPT. According to the results, which were published in June in the article “Caught Off Guard by AI,” some considered any use of AI to be cheating. Others reported having embraced ChatGPT in their teaching, arguing that they need to prepare their students for an AI-infused world. “Many faculty, though,” the article stated, “remain uncertain — willing to consider ways in which these programs could be of some value, but only if students fully understand how they operate.”

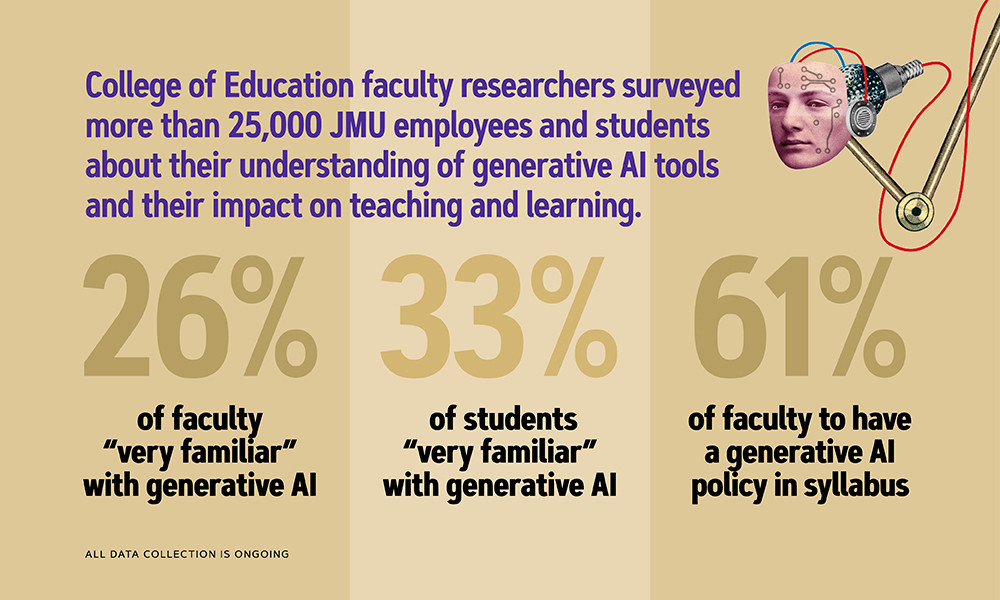

In August, a group of eight faculty researchers in the College of Education sent a survey to more than 25,000 JMU employees and students about their understanding of generative AI tools and the tools' impact on teaching and learning. The survey covered a wide range of topics, including ethical awareness, concerns about the technology and the integration of generative AI tools in educational settings. Of the 129 faculty members who completed the survey, 26% reported being “very familiar” with generative AI, compared with 33% of the 227 student respondents. Nearly equal shares of faculty members and students (46% and 47%, respectively) were “somewhat familiar” with the technology. More than half of the faculty and teaching staff respondents (61%) indicated they would include a generative AI policy or guidance in their syllabus for the Fall 2023 or Spring 2024 semester.

Over the summer, the interdisciplinary research team produced a set of guidelines for College of Education faculty on implementing the technology in the classroom. “In addition to providing an introduction to generative AI and a recommended syllabus statement, we recognized the importance of creating a platform for our faculty members to exchange ideas and activities related to demonstrating the use of generative AI with students during their classes,” said Chelsey Bollinger, lead researcher. “We were aware that many outstanding educators within our college were already employing generative AI in innovative ways, and we were eager to learn from their experiences and insights.”

The goal, according to Bollinger, an associate professor of early, elementary and reading education, is to prepare future educators to use the technology ethically.

“I have already initiated discussions in my courses regarding the ethical considerations surrounding AI, fostering critical thinking and evaluation skills, assessing the inclusivity and accessibility of AI-enhanced learning, and providing a comprehensive understanding of the capabilities and limitations of generative AI,” Bollinger said. “I firmly believe in the importance of setting a responsible example of AI use for my students.”

When ChatGPT launched last year, Jonathan Jones, an assistant professor of history, admitted to feeling a “knee-jerk impulse to ban it and forget about it.” He wondered how he could meaningfully teach historical skills like writing and argumentation if students could have ChatGPT draft an essay for them in seconds, and how he could assess their understanding of course content if ChatGPT could conjure up instant answers to essay prompts with no learning required.

“Like many of my colleagues, I was deeply worried about its potential for academic dishonesty, and I still am,” Jones said. “But it was obvious that AI is here to stay.”

Rather than give in to his concerns, Jones, then teaching at the Virginia Military Institute, created a classroom experiment to test ChatGPT’s ability to generate authentic, accurate historical essays. He asked it to write an essay on Frederick Douglass and the Civil War. He then shared the essay with students in his upper-level undergraduate history class, Frederick Douglass’s America, and asked them to review and edit it, and identify any factual errors.

The exercise proved “a smash hit, with terrific student engagement and clear payoff,” Jones wrote for Perspectives on History, the news magazine of the American Historical Association. “Students jumped at the chance to showcase the knowledge they had learned in class to fact-check the essay. Ultimately, they made a few deletions, some additions, and, most importantly, used their higher-order thinking skills to evaluate the essay and add much-needed context to its key points.”

JMU is currently exploring how AI can be integrated into some of its administrative functions, including its proposed Early Student Success System, part of the university’s Quality Enhancement Plan for the next decade. The system, which will involve data collection and analytics, is designed to improve overall student success and retention while closing the gaps between first-generation and other disadvantaged students and their peers. One idea on the table is a brief check-in survey, given between Weeks 2 and 4 of the semester, to assess students’ basic needs, well-being, academics and sense of belonging. Working groups are thinking through the survey itself, its administration, campus resource capacity, data workflow, and a communications plan explaining how students can opt in to or out of data collection.

In August, more than 5,000 incoming JMU first-year and transfer students considered the following fictional scenario as part of Ethical Reasoning in Action, JMU’s pioneering program that provides a framework for ethical decision-making: Should a university provide students with access to an artificially intelligent chatbot app, AI-Care, to help meet the growing demand for mental health services on campus?

The scenario was conceived by Christian Early, director of Ethical Reasoning in Action, following a tragic series of events in the JMU community in 2022, including the loss of a student-athlete to suicide and a non-student who jumped to their death from a parking garage on campus. “At the time, there was a big push for additional mental health resources in our community,” he said.

Early was especially interested in a solution that would lessen the demands on human counselors. “One of the only things I could think of was AI,” he said.

The Ethical Reasoning in Action staff produced a video to accompany the scenario that offered different perspectives on the issue using actors in the roles of administrators, professors, students and parents. This was prior to OpenAI’s launch of ChatGPT in November 2022, which stoked public fears about AI and its role in our daily lives. Suddenly, the idea of a chatbot counseling system on a college campus wasn’t so far-fetched.

“My mind went to, how do we generate scenarios in which an implementation of AI could conceivably make a big difference,” Early said, “and what kinds of questions should we ask ourselves before we consider that decision? I wasn’t thinking of students writing papers. I was thinking of industry. Where would AI make a difference? And how do we go about thinking about that? Do we really want this change? Do we want students who are dependent on an app to feel better about themselves?”

Early also wondered about the long-term consequences of such an app. “Let’s say you implement it, and it solves the problem of access to resources,” he said. “Does that then mean that in-person counseling is for the rich?” And would it further marginalize a subset of underprivileged students who may not have access to personal devices?

In fact, the proposed AI-Care app checked all the boxes for Ethical Reasoning in Action’s Eight Key Questions (8KQ), which students can use to evaluate the ethical dimensions of a dilemma: fairness, outcomes, responsibilities, character, liberty, empathy, authority and rights.

The scenario was piloted in person in January with incoming transfer students before moving to an online learning module for first-year students in August. After studying the 8KQ, participants were asked to evaluate the ethical dimensions of making chatbot counselors available to students.

Sarah Cheverton, state authorization and compliance officer for online and distance learning at JMU and a lecturer in the College of Business, has made ethical scenarios and the 8KQ a part of her Computer Information Systems class for the past few years. She asks students to consider whether, as members of a company’s board of directors, they should require that the company’s employees have a microchip the size of a grain of rice inserted in their hand to add value to the business process.

“You can imagine the concerns that raises for some people,” Cheverton said. “What’s interesting is that the vast majority of them, when they think about the Eight Key Questions and fairness and liberty and outcomes, will say, ‘Hmm, I don’t think this is a good idea. Too risky. You’re taking people’s liberty away.’ But I always have somebody who’s like, ‘Yeah, I’m about making money. And that’s what we’re going to do.’”

Sam Rooker, a sophomore Intelligence Analysis major and Public Policy and Administration minor, presented “The Use of Artificial Intelligence in Filmmaking: A New Tool or the Death of Creativity?” at the Society for Ethics Across the Curriculum conference at JMU in early October. His presentation focused on how AI can be used in filmmaking and on policy alternatives in light of the 2023 Writers Guild of America strike.

In May, Adobe Inc. announced it would integrate generative AI into its editing software and some of its filmmaking suites. “I found that the editing it was doing was flawless,” said Rooker, a cinema buff with an appreciation for filmmaking technology.

Both the Screen Actors Guild-American Federation of Television and Radio Artists and the Writers Guild of America are hoping that AI will stay out of the writers room, Rooker said. “But I don’t see that being a definitive option. Hollywood studios, I think, are going to see the effects that the writers strike is having, and they’re going to lean into AI rather than simply stop making movies. One of the policy alternatives I explored is writers using AI not as a crutch so much as a supporting element.”

In his first semester at JMU, Rooker, an Honors student, took a course with professor Phil Frana that posed ethical questions related to AI. He wrote a paper for the class on the feasibility of electing an artificially intelligent member of Congress. “That was a wild one,” he admitted. “A lot of the other students were opposed to the idea. But even the more conservative side of the issue asks, should we allow AI personhood rights?”

Last year, as a member of JMU’s speech team, Rooker delivered an analysis of Mary Shelley’s Frankenstein as a lens for AI. “It was a way to get the message out that maybe there is an opportunity here to empathize with a creature that we may not necessarily think of as AI, but that is ultimately an extension of humanity that is intelligent.” The speech earned Rooker the title of Novice Grand Champion at the American Forensic Association National Speech Tournament in Santa Ana, California, in April.

Rooker is currently co-writing a book chapter with Frana, his research adviser, for the Museum of Science Fiction’s publishing arm, and has started another research project exploring whether the Starship delivery robots on campus are affecting how students view AI.

Rooker believes AI will play an increasingly significant role in his education at JMU.

“I think right now JMU is in a transition period with AI,” he said. “And I think more than ever, I’m going to get exposed to more of those ideas in my classes. And it’s not just going to be specialized courses, like with Dr. Frana. I really hope to bring some of that into the skills that I develop to take into the workforce with me.”